WanderPal

Over 1.1 billion people worldwide live with some form of visual impairment, including 43 million who are completely blind. These individuals face significant challenges in independent mobility and social participation. This project explores how to create a wearable assistive system built on human-AI collaboration that not only helps with navigation but also empowers blind users to maintain independence, dignity, and confidence in complex, real-world environments.

Role / Skills

Human-Centered AI

Product/UX Design

User Research

Human AI Collaboration

Computer Vision

Interdisciplinary Collaboration

Team

1 Designer

1 Software

2 Hardware

Timeline

1 month of research, 1 month to build the MVP, and 2 months of continued iteration

Tools Used

Figma

Fusion 360

YOLOv8

The Big Problem

Blind and visually impaired individuals struggle with safe, independent navigation because existing tools are noisy, privacy-invasive, and non-adaptive, leading to reduced confidence and social exclusion.

Limited independence when navigating unfamiliar or complex environments.

Over-reliance on auditory navigation tools, which can overload users in noisy environments.

Privacy concerns with camera-based systems that unintentionally capture faces or sensitive surroundings.

Existing devices often lack adaptability to users’ personal preferences or grip styles with canes.

As a result, many users report reduced confidence, safety concerns, and social exclusion.

Our Goal

The goal was to design an assistive device that:

- Reliably detects navigation markers (e.g., curbs, crosswalks).

- Provides intuitive, discreet feedback without increasing cognitive load.

- Maintains lightweight, modular design suitable for integration with the white cane or wearable accessories.

Contextual Research

Auditory Simulation Study

I developed an immersive audio-based simulation to observe how blind participants interacted with environmental cues. The participants could choose when to request navigation prompts, revealing preferences for autonomy and selective assistance.

Key Findings:

Users valued existing environmental cues, such as traffic lights, as guides for direction.

The visually impaired community relies heavily on existing navigation tools, such as various GPS systems, for daily travel.

Design Strategies

Provide reliable navigation markers rather than step-by-step directions.

Address privacy by restricting cameras to ground-level targets.

Give users control over when and how feedback is delivered.
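The privacy strategy can be enforced in software by discarding the upper part of each frame before any detection runs. Faces and signage higher in the scene then never reach the vision model. The sketch below is illustrative only. It assumes frames arrive as a list of pixel rows, uses a hypothetical `ground_region` helper, and applies an example 50% cutoff. The prototype may instead rely on aiming the cane-mounted camera at the ground, and a real cutoff would depend on the camera angle.

```python
def ground_region(frame, ground_fraction=0.5):
    """Return only the bottom `ground_fraction` of a frame given as a list of pixel rows.

    Hypothetical helper: rows above the cut are dropped before detection,
    so only ground-level content is ever processed.
    """
    if not 0.0 < ground_fraction <= 1.0:
        raise ValueError("ground_fraction must be in (0, 1]")
    height = len(frame)
    start = height - round(height * ground_fraction)
    return frame[start:]


# Example: a 10-row frame keeps its bottom 5 rows with the default cutoff.
frame = [[row] * 4 for row in range(10)]
print(len(ground_region(frame)))  # 5
```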

Tactile Feedback

Reduces auditory overload in noisy environments.

Provides immediate, intuitive cues (e.g., directional vibrations).

Offers discreet interaction, preserving user privacy and dignity in public.

Solution

We propose an assistive device solution that uses a camera to detect navigation markers and werable deliver real-time tactile feedback. By emphasizing reliable cues over step-by-step instructions, it reduces auditory overload and enables blind users to navigate with greater independence and confidence.

Challenge 1: Empowering and Intuitive Navigation

Reliable ground-level detection paired with tactile feedback enables confident, independent travel without overwhelming users.

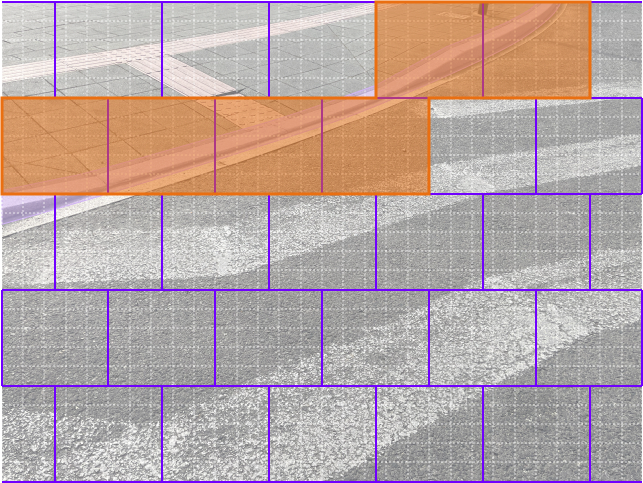

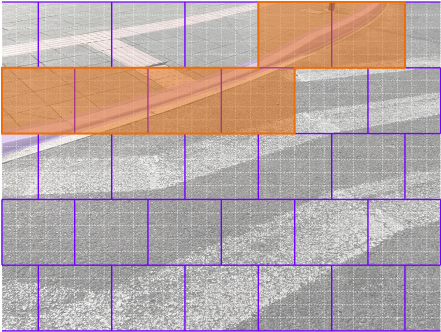

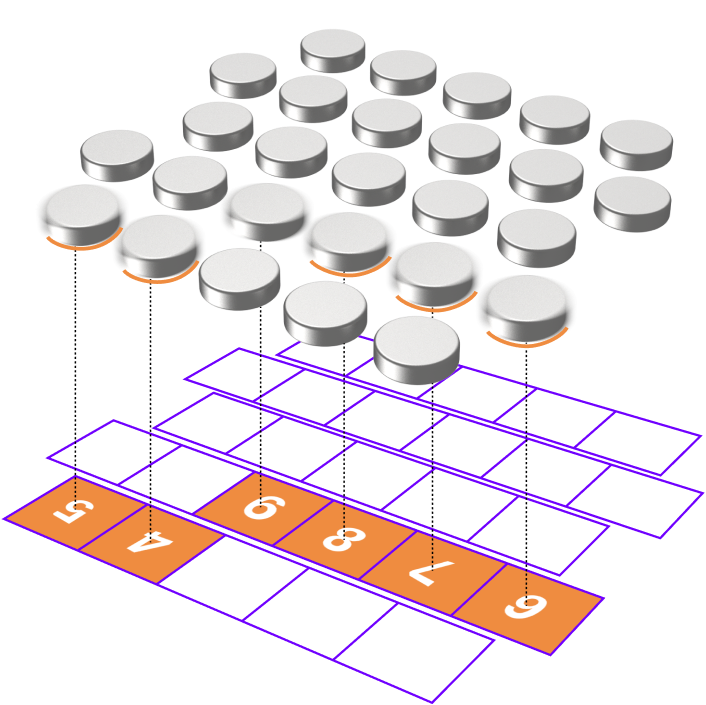

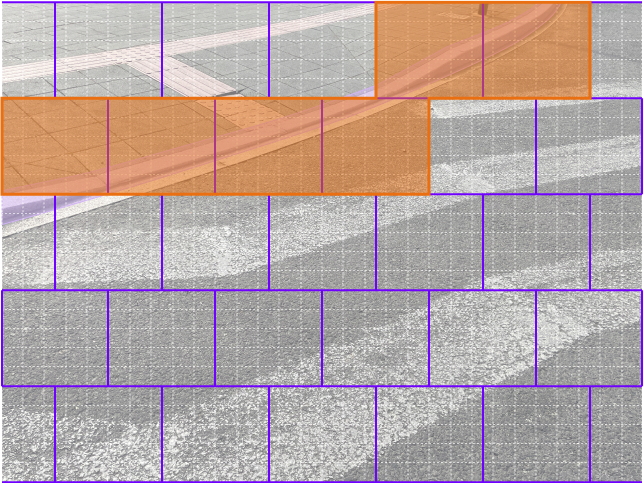

We overlaid a grid on the camera feed and mapped each zone to a vibration motor. When a navigation target appeared, the corresponding motor vibrated, creating a direct spatial link between visual cues and tactile feedback.

Figure: The motors corresponding to the orange area vibrate (diagram dimensions: 7 mm, 2 mm).
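Below is a minimal sketch of the grid mapping, under several assumptions. It assumes a 6 × 6 motor array, matching the 6×6 grid named in the cane-handle study. It also assumes the detector reports each target as a bounding box in normalized image coordinates. The function names are hypothetical. Switching on every cell a target overlaps is only one possible rule, and the prototype may use another, such as the box center.

```python
import math

GRID_ROWS = 6  # assumed 6 x 6 motor array (36 motors)
GRID_COLS = 6


def active_motors(boxes, rows=GRID_ROWS, cols=GRID_COLS):
    """Return the (row, col) motors whose grid cell overlaps any target box.

    boxes: iterable of (x1, y1, x2, y2) in normalized [0, 1] coordinates,
    with (0, 0) at the top-left of the camera frame.
    """
    active = set()
    for x1, y1, x2, y2 in boxes:
        # Order and clamp coordinates so partially visible targets stay on the grid.
        x1, x2 = max(0.0, min(x1, x2)), min(1.0, max(x1, x2))
        y1, y2 = max(0.0, min(y1, y2)), min(1.0, max(y1, y2))
        if x1 >= x2 or y1 >= y2:
            continue  # empty or fully off-frame box
        for r in range(math.floor(y1 * rows), math.ceil(y2 * rows)):
            for c in range(math.floor(x1 * cols), math.ceil(x2 * cols)):
                active.add((r, c))
    return sorted(active)


def motor_pattern(active, rows=GRID_ROWS, cols=GRID_COLS):
    """Flatten active cells into a row-major on/off list, one entry per motor."""
    on = set(active)
    return [(r, c) in on for r in range(rows) for c in range(cols)]


# A target in the bottom-right of the frame drives three motors in the last row.
print(active_motors([(0.5, 0.9, 1.0, 1.0)]))  # [(5, 3), (5, 4), (5, 5)]
```

A real driver would then send the 36-entry pattern to the motor controller on each frame.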

Why Tactile Feedback?

We chose tactile feedback because it introduces an underutilized sensory channel into human–computer interaction. While vision and hearing dominate assistive technologies, touch offers a discreet, intuitive, and immediate way to convey spatial information. For blind users, it reduces auditory strain in noisy environments, protects privacy compared to voice prompts, and provides real-time cues for quick decision-making.

Future Improvement Directions

From the first prototype, we learned that clarity and comfort are critical—vibrations must be distinct, well-positioned, and adaptable to individual users. These insights point to the need for a more flexible and modular system, where the glove and cane can be adjusted for different grip styles, hand sizes, and personal preferences. This evolution led us to design a modular glove-cane system that empowers users with greater control over how and when feedback is delivered.

Solution

To provide discreet and intuitive guidance, I focused on developing a vibration module that could deliver clear cues without adding to the auditory load that blind users already manage in daily navigation. Early attempts to embed a vibration grid into the cane handle proved confusing, as signals shifted with grip variations and alignment. Moving the module to the back of the hand through a glove revealed a more natural solution: vibrations pressed directly against the skin offered immediate, private, and easily interpretable feedback. Iterations with fabric fit, array boundaries, and structural supports led to a final system that pairs a cane-mounted camera for environmental detection with a glove-based motor array for tactile feedback. This discreet combination allows users to receive navigation cues in real time without disrupting their reliance on auditory landmarks, balancing clarity with privacy to create a more intuitive and socially acceptable form of guidance.

Challenge 2: Discreet and Intuitive Guidance

Tactile feedback replaces constant audio prompts, reducing cognitive load and preserving awareness in noisy environments.

Cane Handle Integration

Problems Identified:

- A 6×6 vibration grid aligns at an angle to the cane, confusing curb cues.

- Palm alignment doesn’t accommodate both vertical and diagonal grip styles.

- Uneven handle surface makes module installation difficult.

Insights:

- Feedback placement must adapt to different cane grip methods.

- Direct integration into the cane limits flexibility and can introduce misalignment issues.

- Users need consistent tactile orientation that doesn’t depend on how they hold the cane.

Outcome: Cane-handle integration was abandoned. Shifted toward a wearable (glove) solution for clearer, more flexible tactile delivery.

Exploration 1: Improving the motor-to-back-of-hand fit

- Change the motor-hand contact from surface contact to point contact.

- Integrate the motors into the fabric by weaving them in.

Conclusion: Ensuring the motors fit snugly against the back of the hand is key to improving the clarity of vibration perception; the fabric between the motors and the hand should be thinner.

Next Steps:

- Improve the glove’s fit with the back of the hand so it can adapt to most hand sizes.

- Provide perceptible boundaries for the motor array.

Clarity of Motor Array Feedback

Findings:

- Motors must fit snugly against the back of the hand; thinner fabric improves clarity.

Problems:

- Signals unclear with loose fit or thick material.

Insights: Physical contact quality (fit + material) is as important as motor strength.

Exploration 2: How can the glove fit closely to the back of the hand?

Problems:

- The glove cannot adapt to different hand sizes (on smaller hands it feels loose).

- It is difficult to clearly determine which motor is vibrating.

- Users cannot easily identify the boundaries of the motor array.

Summary: loose fit on smaller hands, unclear motor boundaries, and difficulty telling which motor is vibrating.

Insights:

- Elastic fabric alone cannot solve fit variation — structure is needed.

- Users rely on perceivable boundaries to map vibration locations.

- Placement and ergonomics strongly affect interpretability of feedback.

Outcome: Established that glove-based wearables deliver more intuitive feedback than cane-handle integration. Key lesson: ergonomic adaptability and clear motor boundaries are critical to effective tactile feedback.

Final Version: Glove + White Cane with Camera Module

A modular glove-cane system lets users control how and when feedback is delivered, supporting diverse grip styles and preferences.

Cane Handle Integration

Add a rigid frame and use a custom PCB.

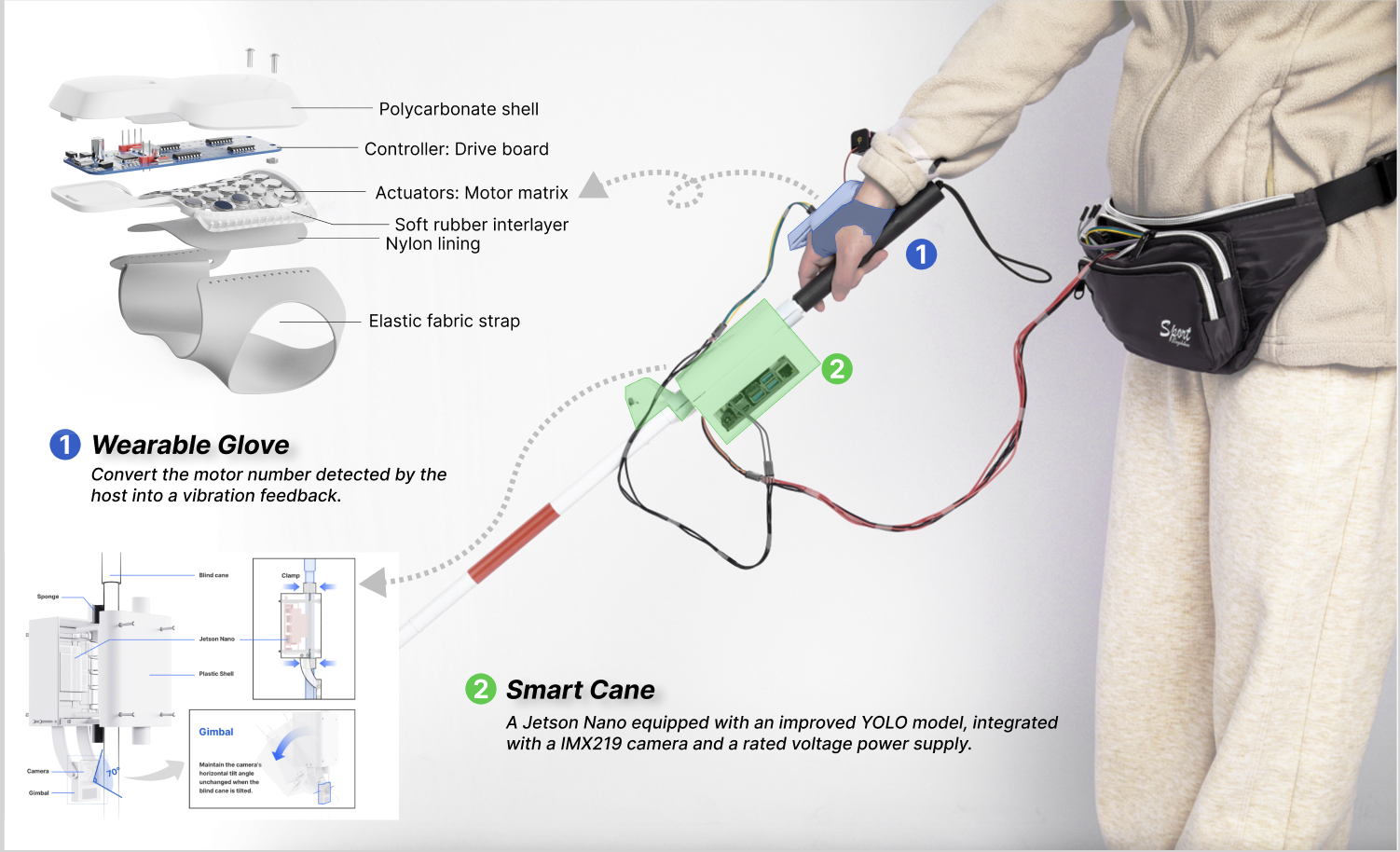

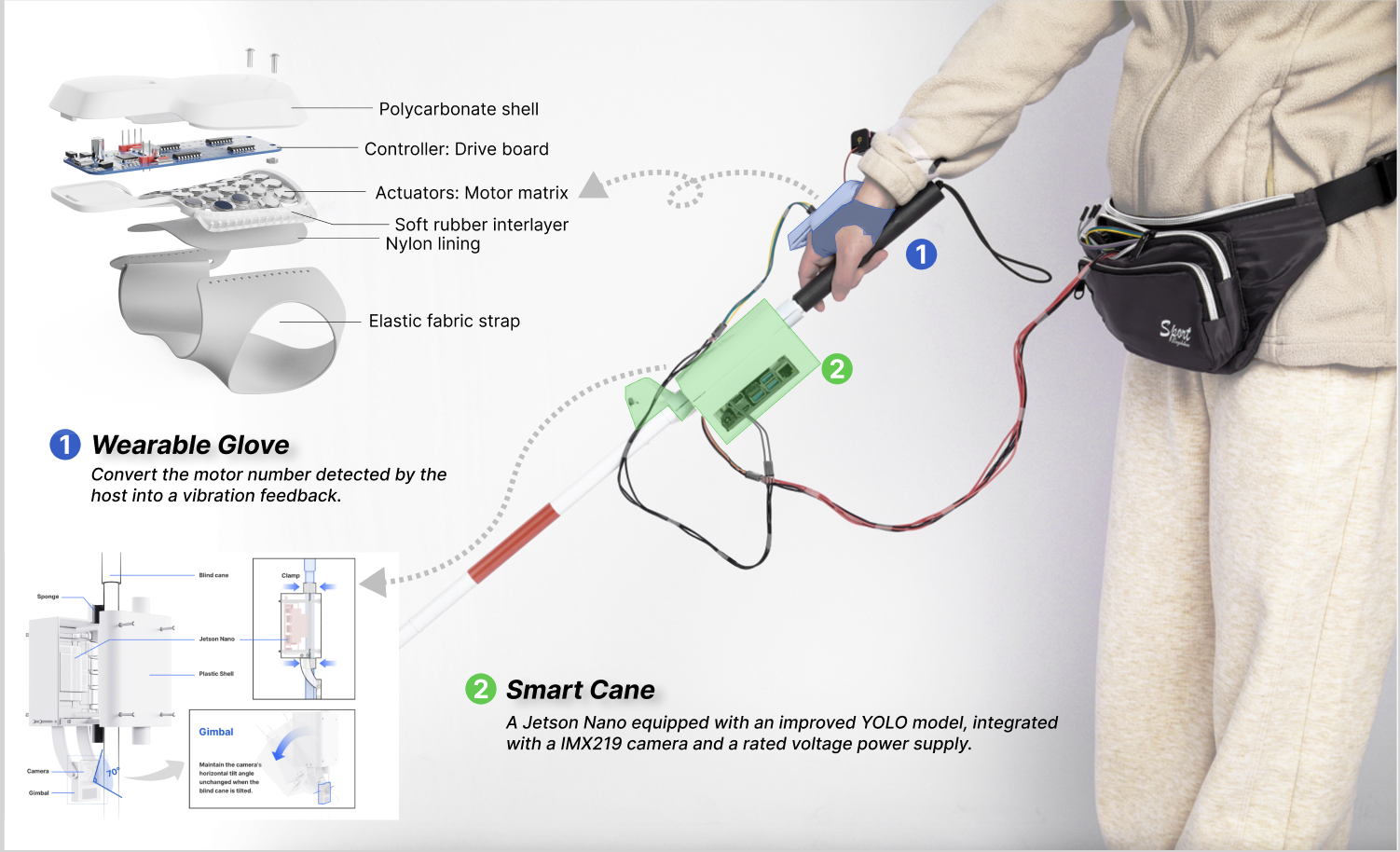

Final Prototype

Design:

- Glove with vibration motor array for tactile feedback.

- Camera module attached to cane to detect navigation cues.

Problems with Final Prototype:

- Rigid PCB support structure reduced comfort and adaptability.

- Not waterproof, limiting outdoor usability.

Insights:

- Modularity (separating sensing from feedback) provides flexibility and clarity.

- Outdoor usability requires robustness (waterproofing, stronger structures).

- Combining familiar tools (the white cane) with wearable tech lowers the learning curve and preserves user independence.

Outcome: The final solution combines the glove with a cane-mounted camera, providing discreet tactile guidance while preserving independence.

Final Version

The final prototype integrates a glove-based vibration module with a camera-mounted white cane, forming a modular system that delivers discreet and intuitive navigation guidance. The glove translates visual data captured by the camera into tactile feedback through a structured motor array, allowing users to sense direction and obstacles in real time. This configuration minimizes auditory overload, preserves user privacy, and enhances autonomy by letting individuals control when and how feedback is received. The result is a lightweight, ergonomic, and socially acceptable assistive device that merges familiar tools with emerging technology—enabling navigation that feels both natural and empowering.

Results and Metrics

Participants correctly identified 94.4%–100% of the 36 vibration signals from memory.

High recognition accuracy indicates that participants could reliably interpret the tactile signals.
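The arithmetic behind the reported range assumes that each participant was scored on all 36 signals, one per motor in the 6 × 6 array. Under that assumption, each missed signal costs about 2.8 percentage points. The 94.4% to 100% range then corresponds to 34 to 36 correct identifications. The helper name is hypothetical.

```python
TOTAL_SIGNALS = 36  # assumed: 6 x 6 motor array, one signal per motor


def recognition_accuracy(correct, total=TOTAL_SIGNALS):
    """Percentage of vibration signals a participant identified correctly."""
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")
    return 100.0 * correct / total


for correct in (34, 35, 36):
    print(f"{correct}/{TOTAL_SIGNALS} correct -> {recognition_accuracy(correct):.1f}%")
# 34/36 correct -> 94.4%
# 35/36 correct -> 97.2%
# 36/36 correct -> 100.0%
```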

All 6 participants reported the feedback was easy to understand and consistently helped them locate curbstones.

Clear vibration patterns reduced confusion in real-world navigation.

Live images were successfully translated into motor signals, providing immediate, directional feedback during walking tasks.

Real-time camera-to-vibration mapping enabled confident mobility.

Five of six participants walked at the same speed or faster on device-assisted routes, with improvements of up to 27.6%.

Participants completed walking tasks more efficiently with the device.
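The case study does not say how "faster" was computed, and the two common definitions give different numbers. The sketch below is an assumption-labeled illustration of that point. It assumes each participant walked the same route with and without the device and that completion times were recorded. For a hypothetical route taking 100 s without the device and 80 s with it, completion time drops by 20%, while walking speed rises by 25%. All function names are hypothetical.

```python
def percent_faster(baseline_s, device_s):
    """Percent reduction in route completion time when using the device."""
    if baseline_s <= 0 or device_s <= 0:
        raise ValueError("completion times must be positive")
    return 100.0 * (baseline_s - device_s) / baseline_s


def percent_speed_gain(baseline_s, device_s):
    """Percent increase in average walking speed over the same route length."""
    if baseline_s <= 0 or device_s <= 0:
        raise ValueError("completion times must be positive")
    return 100.0 * (baseline_s / device_s - 1.0)


def count_equal_or_faster(trials):
    """Count participants whose device-assisted time was no slower than baseline.

    trials: iterable of (baseline_s, device_s) pairs, one per participant.
    """
    return sum(1 for baseline_s, device_s in trials if device_s <= baseline_s)
```

Reporting which definition was used would make the 27.6% figure directly comparable with other studies.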

Negative / Challenges

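- The device needs to perform better in crowded environments, where many pedestrians walk along the curbside: three participants reported that it occasionally failed to provide feedback in such conditions.

- The interaction scheme needs more flexibility, including alternative reference information when the curbside is missing, to adapt to complex real-world environments.

- Balancing inclusivity and affordability for different populations remains an ongoing challenge.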

Reflections and Learnings

Designing for Comfort and Dignity

Effective assistive design goes beyond solving functional problems. This project taught me that true accessibility means prioritizing user comfort, autonomy, and dignity by ensuring that technology supports users without drawing unwanted attention or compromising their sense of self.

Co-Creating with Users Leads to Better Solutions

The iterative workshops and field tests revealed that involving visually impaired users early and often leads to more meaningful insights. Their lived experiences shaped the system’s functionality, ultimately resulting in a tool that aligns with real-world navigation habits and emotional needs.

Next steps

Enhance performance in crowded scenarios

Further refine the vision model to better distinguish curbstones from pedestrians and dynamic obstacles in busy environments.
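Retraining the model is the main fix, but a simple post-processing step could also keep pedestrian regions from being reported as curb. The sketch below is a hypothetical heuristic, not the project's method. It assumes the detector returns labeled boxes with confidences, using the assumed class names "curb" and "person". It maps those boxes onto the same assumed 6 × 6 grid and vibrates only the curb cells that no pedestrian covers.

```python
import math
from dataclasses import dataclass

ROWS, COLS = 6, 6  # assumed 6 x 6 motor array


@dataclass(frozen=True)
class Detection:
    label: str         # hypothetical class names, e.g. "curb" or "person"
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x1, y1, x2, y2), normalized to [0, 1]


def cells(box, rows=ROWS, cols=COLS):
    """Grid cells (row, col) overlapped by a normalized box."""
    x1, y1, x2, y2 = box
    x1, x2 = max(0.0, min(x1, x2)), min(1.0, max(x1, x2))
    y1, y2 = max(0.0, min(y1, y2)), min(1.0, max(y1, y2))
    if x1 >= x2 or y1 >= y2:
        return set()
    return {
        (r, c)
        for r in range(math.floor(y1 * rows), math.ceil(y2 * rows))
        for c in range(math.floor(x1 * cols), math.ceil(x2 * cols))
    }


def curb_cells(detections, min_confidence=0.5):
    """Curb cells to vibrate, excluding any cell covered by a detected pedestrian."""
    curb, blocked = set(), set()
    for d in detections:
        if d.confidence < min_confidence:
            continue  # ignore low-confidence detections entirely
        if d.label == "curb":
            curb |= cells(d.box)
        elif d.label == "person":
            blocked |= cells(d.box)
    return sorted(curb - blocked)
```

The trade-off is that a real curb hidden behind a pedestrian is also suppressed, so this alone would not fix the missed feedback participants reported in crowds.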

Develop alternative guidance cues when curbs are absent.

Explore additional environmental references—such as ground texture, object edges, or distance mapping—to provide reliable feedback even without curb boundaries.

WanderPal

Over 1.1 billion people worldwide were living with some form of visual impairment, including 43 million who were completely blind. These individuals face significant challenges in independent mobility and social participation. This project explores how to create a human AI collaboration wearable assistive system that not only helps with navigation but also empowers blind users to maintain independence, dignity, and confidence in complex, real-world environments.

Role / Skills

Human-Centered AI

Product/UX Design

User Research

Human AI Collaboration

Computer Vision

Interdisplinary Collaboration

Team

1 Designer

1 Software

2 Hardware

Timeline

1 months for research, 1 month for MVP, with 2 months of continued iteration

Tools Used

Figma

Fusion 360

Yolov8

The big problem

Blind and visually impaired individuals struggle with safe, independent navigation due to noisy, privacy-risking, and non-adaptive tools, leading to reduced confidence and social exclusion.

Limited independence when navigating unfamiliar or complex environments.

Over-reliance on auditory navigation tools, which can overload users in noisy environments.

Privacy concerns with camera-based systems that unintentionally capture faces or sensitive surroundings.

Existing devices often lack adaptability to users’ personal preferences or grip styles with canes.

As a result, many users report reduced confidence, safety concerns, and social exclusion.

Our Goal

The goal was to design an assistive device that:

Create a transportation system that:

- Reliably detects navigation markers (e.g., curbs, crosswalks).

- Provides intuitive, discreet feedback without increasing cognitive load.

- Maintains lightweight, modular design suitable for integration with the white cane or wearable accessories.

Contextual Research

Auditory Simulation Study

I developed an immersive audio-based simulation to observe how blind participants interacted with environmental cues. The participants could choose when to request navigation prompts, revealing preferences for autonomy and selective assistance.

Key Findings:

Users valued existing environmental cue including traffic lights as guide for direction.

visually impaired community really relied on existing navigation tools for dialy

Design Strategies

Provide reliable navigation markers rather than step-by-step directions.

Address privacy by restricting cameras to ground-level targets.

Give users control over when and how feedback is delivered.

Tactile Feedback

Reduces auditory overload in noisy environments.

Provides immediate, intuitive cues (e.g., directional vibrations).

Offers discreet interaction, preserving user privacy and dignity in public.

Solution

We propose an assistive device solution that uses a camera to detect navigation markers and werable deliver real-time tactile feedback. By emphasizing reliable cues over step-by-step instructions, it reduces auditory overload and enables blind users to navigate with greater independence and confidence.

Challenge 1: Flexibility with Safty anf

Reliable ground-level detection paired with tactile feedback enables confident, independent travel without overwhelming users.

We overlaid a grid on the camera feed and mapped each zone to a vibration motor. When a navigation target appeared, the corresponding motor vibrated, creating a direct spatial link between visual cues and tactile feedback.

The motors corresponding to the orange area vibrate

7mm

2mm

Why Tactile Feedback?

We chose tactile feedback because it introduces an underutilized sensory channel into human–computer interaction. While vision and hearing dominate assistive technologies, touch offers a discreet, intuitive, and immediate way to convey spatial information. For blind users, it reduces auditory strain in noisy environments, protects privacy compared to voice prompts, and provides real-time cues for quick decision-making.

Future Improvement Directions

From the first prototype, we learned that clarity and comfort are critical—vibrations must be distinct, well-positioned, and adaptable to individual users. These insights point to the need for a more flexible and modular system, where the glove and cane can be adjusted for different grip styles, hand sizes, and personal preferences. This evolution led us to design a modular glove-cane system that empowers users with greater control over how and when feedback is delivered.

Solution

To provide discreet and intuitive guidance, I focused on developing a vibration module that could deliver clear cues without adding to the auditory load that blind users already manage in daily navigation. Early attempts to embed a vibration grid into the cane handle proved confusing, as signals shifted with grip variations and alignment. Moving the module to the back of the hand through a glove revealed a more natural solution: vibrations pressed directly against the skin offered immediate, private, and easily interpretable feedback. Iterations with fabric fit, array boundaries, and structural supports led to a final system that pairs a cane-mounted camera for environmental detection with a glove-based motor array for tactile feedback. This discreet combination allows users to receive navigation cues in real time without disrupting their reliance on auditory landmarks, balancing clarity with privacy to create a more intuitive and socially acceptable form of guidance.

Challenge 1: Flexibility with Safty anf

Tactile feedback replaces constant audio prompts, reducing cognitive load and preserving awareness in noisy environments.

Cane Handle Integration

Problems Identified:

- A 6×6 vibration grid aligns at an angle to the cane, confusing curb cues.

- Palm alignment doesn’t accommodate both vertical and diagonal grip styles.

- Uneven handle surface makes module installation difficult.

Insights:

- Feedback placement must adapt to different cane grip methods.

- Direct integration into the cane limits flexibility and can introduce misalignment issues.

- Users need consistent tactile orientation that doesn’t depend on how they hold the cane.

Outcome: Cane-handle integration was abandoned. Shifted toward a wearable (glove) solution for clearer, more flexible tactile delivery.

Improve motor-to-handback fit

Change motor–hand contact from surface to point

Integrate the motors into the fabric through weaving

Weave the motors into the fabric

Conclusion: Ensuring the motors fit snugly against the back of the hand is key to improving the clarity of vibration perception; the fabric between the motors and the hand should be thinner.

Next Steps:

- Improve the glove’s fit with the back of the hand so it can adapt to most hand sizes.

- Provide perceptible boundaries for the motor array.

Clarity of Motor Array Feedback

Findings:

- Motors must fit snugly against the back of the hand; thinner fabric improves clarity.

Problems:

- Signals unclear with loose fit or thick material.

Insights: Physical contact quality (fit + material) is as important as motor strength.

Exploration 2: How can the glove fit closely to the back of the hand?

Problems:

- The glove cannot adapt to different hand sizes (on smaller hands it feels loose).

- It is difficult to clearly determine which motor is vibrating.

- Users cannot easily identify the boundaries of the motor array.

Loose fit on smaller hands, unclear motor boundaries, difficult to distinguish which motor vibrates.

Insights:

- Elastic fabric alone cannot solve fit variation — structure is needed.

- Users rely on perceivable boundaries to map vibration locations.

- Placement and ergonomics strongly affect interpretability of feedback.

Outcome: → Established that glove-based wearables deliver more intuitive feedback than cane-handle integration. Key lesson: ergonomic adaptability and clear motor boundaries are critical to effective tactile feedback.

Challenge 1: Flexibility with Safty anf

A modular glove-cane system lets users control how and when feedback is delivered, supporting diverse grip styles and preferences.

Cane Handle Intergration

Add a rigid frame and use a custom PCB.

Final Version

Design:

- Glove with vibration motor array for tactile feedback.

- Camera module attached to cane to detect navigation cues.

Problems with Final Prototype:

- Rigid PCB support structure reduced comfort and adaptability.

- Not waterproof, limiting outdoor usability.

Insights:

- Modularity (separating sensing from feedback) provides flexibility and clarity.

- Outdoor usability requires robustness (waterproofing, stronger structures).

- Combining familiar tools (white cane) with wearable tech lowers learning curve and preserves user independence.

Outcome: → Final solution combines glove + cane-mounted camera, providing discreet tactile guidance while maintaining independence.

Final Version

The final prototype integrates a glove-based vibration module with a camera-mounted white cane, forming a modular system that delivers discreet and intuitive navigation guidance. The glove translates visual data captured by the camera into tactile feedback through a structured motor array, allowing users to sense direction and obstacles in real time. This configuration minimizes auditory overload, preserves user privacy, and enhances autonomy by letting individuals control when and how feedback is received. The result is a lightweight, ergonomic, and socially acceptable assistive device that merges familiar tools with emerging technology—enabling navigation that feels both natural and empowering.

Results and Matrics

Participants correctly identified 94.4%–100% of the 36 vibration signals from memory.

High recognition accuracy ensured reliable tactile understanding.

All 6 participants reported the feedback was easy to understand and consistently helped them locate curbstones.

Clear vibration patterns reduced confusion in real-world navigation.

Live images were successfully translated into motor signals, providing immediate, directional feedback during walking tasks.

Real-time camera-to-vibration mapping enabled confident mobility.

5 of 6 participants walked equal or faster, with improvements up to 27.6% faster on device-assisted routes.

Participants completed walking tasks more efficiently with the device.

Negative / Challenges

- Improve the functonality of the Three participants reported that the device occasionally failed to provide feedback in crowded environments.

- Balancing inclusivity vs. affordability for different populations was ongoing.

Reflections and Learnings

Designing for Comfort and Dignity

Effective assistive design goes beyond solving functional problems. This project taught me that true accessibility means prioritizing user comfort, autonomy, and dignity by ensuring that technology supports users without drawing unwanted attention or compromising their sense of self.

Co-Creating with Users Leads to Better Solutions

The iterative workshops and field tests revealed that involving visually impaired users early and often leads to more meaningful insights. Their lived experiences shaped the system’s functionality, ultimately resulting in a tool that aligns with real-world navigation habits and emotional needs.

Next steps

Enhance performance in crowded scenarios

Further refine the vision model to better distinguish curbstones from pedestrians and dynamic obstacles in busy environments.

Develop alternative guidance cues when curbs are absent.

Explore additional environmental references—such as ground texture, object edges, or distance mapping—to provide reliable feedback even without curb boundaries.

Quick Access

The Quick Access section includes shortcuts to Schedule Rides and Recent Rides in one click.

Tracking Bar

Handy tab displayed on the homepage that allows users to access their ongoing trip in one click.

WanderPal

Over 1.1 billion people worldwide were living with some form of visual impairment, including 43 million who were completely blind. These individuals face significant challenges in independent mobility and social participation. This project explores how to create a human AI collaboration wearable assistive system that not only helps with navigation but also empowers blind users to maintain independence, dignity, and confidence in complex, real-world environments.

Role / Skills

Human-Centered AI

Product/UX Design

User Research

Human AI Collaboration

Computer Vision

Interdisciplinary Collaboration

Team

1 Designer

1 Software

2 Hardware

Timeline

1 months for research, 1 month for MVP, with 2 months of continued iteration

Tools Used

Figma

Fusion 360

Yolov8

The big problem

Blind and visually impaired individuals struggle with safe, independent navigation due to noisy, privacy-risking, and non-adaptive tools, leading to reduced confidence and social exclusion.

Limited independence when navigating unfamiliar or complex environments.

Over-reliance on auditory navigation tools, which can overload users in noisy environments.

Privacy concerns with camera-based systems that unintentionally capture faces or sensitive surroundings.

Existing devices often lack adaptability to users’ personal preferences or grip styles with canes.

As a result, many users report reduced confidence, safety concerns, and social exclusion.

Our Goal

The goal was to design an assistive device that:

- Reliably detects navigation markers (e.g., curbs, crosswalks).

- Provides intuitive, discreet feedback without increasing cognitive load.

- Maintains lightweight, modular design suitable for integration with the white cane or wearable accessories.

Contextual Research

Auditory Simulation Study

I developed an immersive audio-based simulation to observe how blind participants interacted with environmental cues. The participants could choose when to request navigation prompts, revealing preferences for autonomy and selective assistance.

Key Findings:

Users valued existing environmental cue including traffic lights as guide for direction.

visually impaired community really relied on existing navigation tools for daily travel like various GPS system.

Design Strategies

Provide reliable navigation markers rather than step-by-step directions.

Address privacy by restricting cameras to ground-level targets.

Give users control over when and how feedback is delivered.

Tactile Feedback

Reduces auditory overload in noisy environments.

Provides immediate, intuitive cues (e.g., directional vibrations).

Offers discreet interaction, preserving user privacy and dignity in public.

Solution

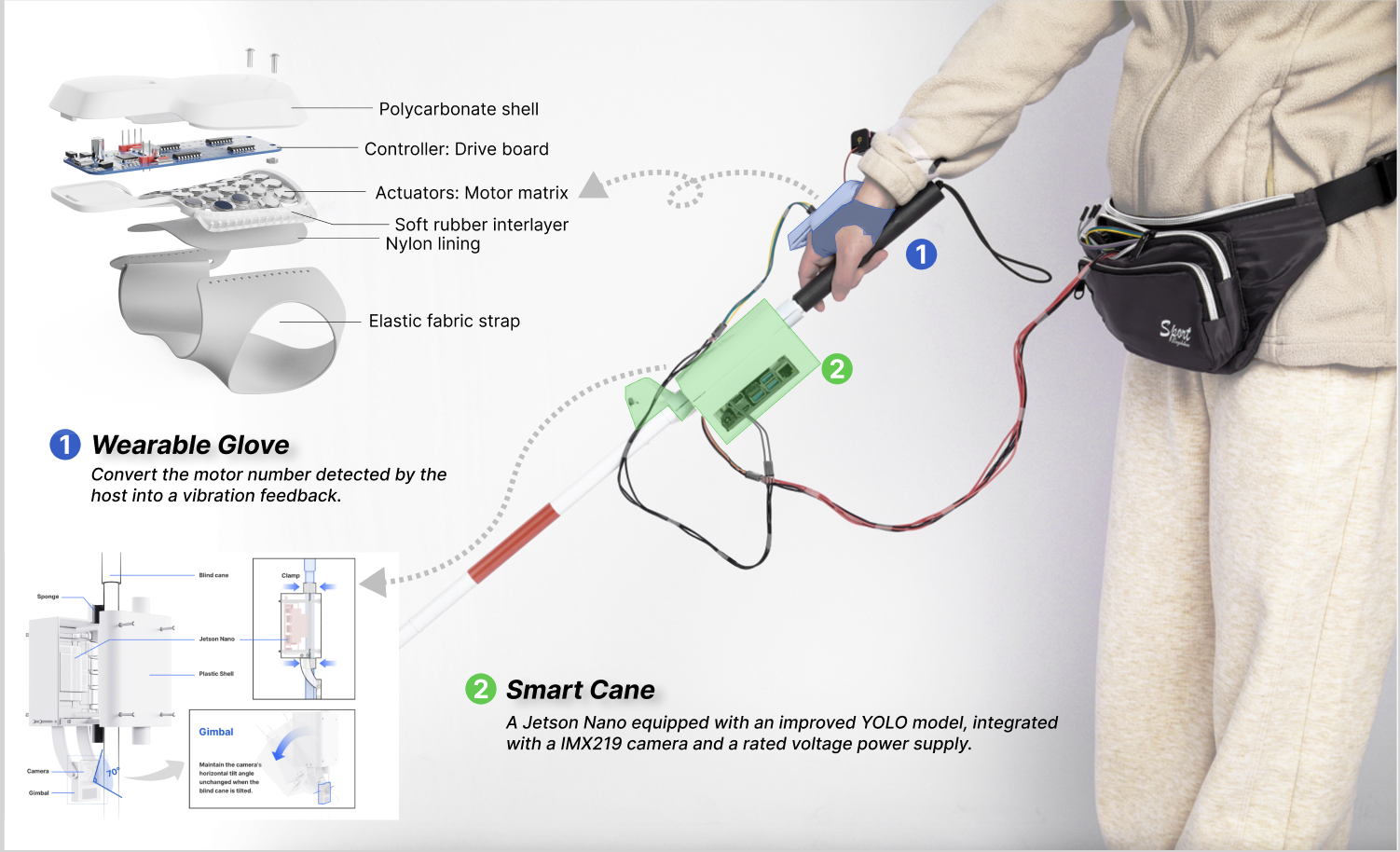

We propose an assistive device solution that uses a camera to detect navigation markers and a wearable to deliver real-time tactile feedback. Since the white cane is widely used, the device can be integrated with it, combining a camera for image capture and a vibration module for feedback into a unified design. By emphasizing reliable cues over step-by-step instructions, it reduces auditory overload and enables blind users to navigate with greater independence and confidence.

Challenge 1

Empowering and Intuitive Navigation

Reliable ground-level detection paired with tactile feedback enables confident, independent travel without overwhelming users.

We overlaid a grid on the camera feed and mapped each zone to a vibration motor. When a navigation target appeared, the corresponding motor vibrated, creating a direct spatial link between visual cues and tactile feedback.

The motors corresponding to the orange area vibrate

7mm

2mm

Why Tactile Feedback?

We chose tactile feedback because it introduces an underutilized sensory channel into human–computer interaction. While vision and hearing dominate assistive technologies, touch offers a discreet, intuitive, and immediate way to convey spatial information. For blind users, it reduces auditory strain in noisy environments, protects privacy compared to voice prompts, and provides real-time cues for quick decision-making.

Future Improvement Directions

From the first prototype, we learned that clarity and comfort are critical—vibrations must be distinct, well-positioned, and adaptable to individual users. These insights point to the need for a more flexible and modular system, where the glove and cane can be adjusted for different grip styles, hand sizes, and personal preferences. This evolution led us to design a modular glove-cane system that empowers users with greater control over how and when feedback is delivered.

Solution

To provide discreet and intuitive guidance, I focused on developing a vibration module that could deliver clear cues without adding to the auditory load that blind users already manage in daily navigation. Early attempts to embed a vibration grid into the cane handle proved confusing, as signals shifted with grip variations and alignment. Moving the module to the back of the hand through a glove revealed a more natural solution: vibrations pressed directly against the skin offered immediate, private, and easily interpretable feedback. Iterations with fabric fit, array boundaries, and structural supports led to a final system that pairs a cane-mounted camera for environmental detection with a glove-based motor array for tactile feedback. This discreet combination allows users to receive navigation cues in real time without disrupting their reliance on auditory landmarks, balancing clarity with privacy to create a more intuitive and socially acceptable form of guidance.

Challenge 2

Discreet and Intuitive Guidance

Tactile feedback replaces constant audio prompts, reducing cognitive load and preserving awareness in noisy environments.

Cane Handle Integration

Problems Identified:

- A 6×6 vibration grid aligns at an angle to the cane, confusing curb cues.

- Palm alignment doesn’t accommodate both vertical and diagonal grip styles.

- Uneven handle surface makes module installation difficult.

Insights:

- Feedback placement must adapt to different cane grip methods.

- Direct integration into the cane limits flexibility and can introduce misalignment issues.

- Users need consistent tactile orientation that doesn’t depend on how they hold the cane.

Outcome: Cane-handle integration was abandoned. Shifted toward a wearable (glove) solution for clearer, more flexible tactile delivery.

Improve motor-to-handback fit

Change motor–hand contact from surface to point

Integrate the motors into the fabric through weaving

Weave the motors into the fabric

Conclusion: Ensuring the motors fit snugly against the back of the hand is key to improving the clarity of vibration perception; the fabric between the motors and the hand should be thinner.

Next Steps:

- Improve the glove’s fit with the back of the hand so it can adapt to most hand sizes.

- Provide perceptible boundaries for the motor array.

Clarity of Motor Array Feedback

Findings:

- Motors must fit snugly against the back of the hand; thinner fabric improves clarity.

Problems:

- Signals unclear with loose fit or thick material.

Insights: Physical contact quality (fit + material) is as important as motor strength.

Exploration 2: How can the glove fit closely to the back of the hand?

Problems:

- The glove cannot adapt to different hand sizes (on smaller hands it feels loose).

- It is difficult to clearly determine which motor is vibrating.

- Users cannot easily identify the boundaries of the motor array.

Loose fit on smaller hands, unclear motor boundaries, difficult to distinguish which motor vibrates.

Insights:

- Elastic fabric alone cannot solve fit variation — structure is needed.

- Users rely on perceivable boundaries to map vibration locations.

- Placement and ergonomics strongly affect interpretability of feedback.

Outcome: → Established that glove-based wearables deliver more intuitive feedback than cane-handle integration. Key lesson: ergonomic adaptability and clear motor boundaries are critical to effective tactile feedback.

Final Version

Glove + White Cane with Camera Module

A modular glove-cane system lets users control how and when feedback is delivered, supporting diverse grip styles and preferences.

Cane Handle Integration

Add a rigid frame and use a custom PCB.

Final Version

Design:

- Glove with vibration motor array for tactile feedback.

- Camera module attached to cane to detect navigation cues.

Problems with Final Prototype:

- Rigid PCB support structure reduced comfort and adaptability.

- Not waterproof, limiting outdoor usability.

Insights:

- Modularity (separating sensing from feedback) provides flexibility and clarity.

- Outdoor usability requires robustness (waterproofing, stronger structures).

- Combining familiar tools (white cane) with wearable tech lowers learning curve and preserves user independence.

Outcome: → Final solution combines glove + cane-mounted camera, providing discreet tactile guidance while maintaining independence.

Final Version

The final prototype integrates a glove-based vibration module with a camera-mounted white cane, forming a modular system that delivers discreet and intuitive navigation guidance. The glove translates visual data captured by the camera into tactile feedback through a structured motor array, allowing users to sense direction and obstacles in real time. This configuration minimizes auditory overload, preserves user privacy, and enhances autonomy by letting individuals control when and how feedback is received. The result is a lightweight, ergonomic, and socially acceptable assistive device that merges familiar tools with emerging technology—enabling navigation that feels both natural and empowering.

Results and Matrics

Participants correctly identified 94.4%–100% of the 36 vibration signals from memory.

High recognition accuracy ensured reliable tactile understanding.

All 6 participants reported the feedback was easy to understand and consistently helped them locate curbstones.

Clear vibration patterns reduced confusion in real-world navigation.

Live images were successfully translated into motor signals, providing immediate, directional feedback during walking tasks.

Real-time camera-to-vibration mapping enabled confident mobility.

5 of 6 participants walked equal or faster, with improvements up to 27.6% faster on device-assisted routes.

Participants completed walking tasks more efficiently with the device.

Negative / Challenges

- Improve the functionality of the device in crowded environments when many pedestrians are walking along the curbside.

- Incorporation of more flexible interaction scheme including an alternative reference information while curbside is missing to adapt the complicated real world environment.

Reflections and Learnings

Designing for Comfort and Dignity

Effective assistive design goes beyond solving functional problems. This project taught me that true accessibility means prioritizing user comfort, autonomy, and dignity by ensuring that technology supports users without drawing unwanted attention or compromising their sense of self.

Co-Creating with Users Leads to Better Solutions

The iterative workshops and field tests revealed that involving visually impaired users early and often leads to more meaningful insights. Their lived experiences shaped the system’s functionality, ultimately resulting in a tool that aligns with real-world navigation habits and emotional needs.

Next steps

Enhance performance in crowded scenarios

Further refine the vision model to better distinguish curbstones from pedestrians and dynamic obstacles in busy environments.

Develop alternative guidance cues when curbs are absent.

Explore additional environmental references—such as ground texture, object edges, or distance mapping—to provide reliable feedback even without curb boundaries.